The STL (Standard Template Library) adds containers and algorithms to the C++ language. The C specification has no equivalent library, but a number of available libraries provide common data structure and algorithm implementations that can be used in C programs. One such library is gnulib, which provides, among other things, implementations of common container data structures such as lists and trees. This post gives a quick comparison of the performance of several gnulib list implementations against the STL list implementation. For this analysis, gcc v4.0.1 with -O2 optimization and the gnulib source from June 13th, 2009 were used.

The Benchmark

A very simple benchmark was chosen for the performance characterization, and two operations were measured. The first was the insertion of a large number of integers into a list, with the integers added one at a time to the end of the list. The second was searching for elements within the list. These are very simple tests, and the results are somewhat inconclusive in the absence of a larger and more thorough suite of performance tests.

STL list benchmarking source code:

    // The insert code
    start = clock();
    for (uint32_t i = 0; i < INSERT_ITERATIONS; ++i)
        l.push_back(i);
    stop = clock();

    // The search code
    start = clock();
    for (uint32_t i = 0; i < SEARCH_ITERATIONS; ++i)
        find(l.begin(), l.end(), i);
    stop = clock();
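The fragment above omits its declarations and the conversion from clock ticks to seconds. A minimal self-contained sketch of the same two loops might look like the following. The `BenchResult` struct and the `bench_std_list` function are names invented for this illustration, and the iteration counts are passed as parameters because the values used in the original runs are not stated. The search result is counted here so that the compiler cannot discard the search loop as dead code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <ctime>
#include <list>

// Hypothetical result record: CPU seconds as reported by clock().
struct BenchResult {
    double insert_seconds;
    double search_seconds;
    std::uint32_t found;  // number of searches that located their element
};

// Runs the insert loop, then the search loop, on a std::list.
BenchResult bench_std_list(std::uint32_t insert_iterations,
                           std::uint32_t search_iterations) {
    std::list<std::uint32_t> l;
    BenchResult r{0.0, 0.0, 0};

    // Insert: append the integers one at a time to the end of the list.
    std::clock_t start = std::clock();
    for (std::uint32_t i = 0; i < insert_iterations; ++i)
        l.push_back(i);
    std::clock_t stop = std::clock();
    r.insert_seconds = static_cast<double>(stop - start) / CLOCKS_PER_SEC;
    assert(l.size() == insert_iterations);

    // Search: linear scan from the front of the list for each value.
    start = std::clock();
    for (std::uint32_t i = 0; i < search_iterations; ++i)
        if (std::find(l.begin(), l.end(), i) != l.end())
            ++r.found;
    stop = std::clock();
    r.search_seconds = static_cast<double>(stop - start) / CLOCKS_PER_SEC;

    return r;
}
```

Calling `bench_std_list(n, m)` with `m <= n` should report `found == m`, since every value searched for was inserted. Note that `clock()` measures processor time rather than wall-clock time, and its resolution is coarse, so small iteration counts may report zero.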

Gnulib list benchmarking source code:

    // The insert code
    start = clock();
    for (i = 0; i < INSERT_ITERATIONS; ++i)
        gl_list_add_last(list, (void*)i);
    stop = clock();

    // The search code
    start = clock();
    for (i = 0; i < SEARCH_ITERATIONS; ++i)
        gl_list_search(list, (void*)i);
    stop = clock();
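The gnulib list stores each element as an untyped pointer, which is why the loop counter is cast to `void*` rather than stored as an integer. The sketch below shows one way to make that round trip explicit by going through `uintptr_t`. The helper names `int_to_elt` and `elt_to_int` are invented for this illustration and are not part of gnulib. Converting an integer to a pointer is implementation-defined, so this is an assumption that holds on mainstream platforms rather than a guarantee of the language.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: pack a small integer into a pointer-sized element.
// The resulting pointer is never dereferenced; it only carries the value.
inline const void* int_to_elt(std::uint32_t value) {
    return reinterpret_cast<const void*>(static_cast<std::uintptr_t>(value));
}

// Hypothetical helper: recover the integer stored by int_to_elt.
inline std::uint32_t elt_to_int(const void* elt) {
    std::uintptr_t raw = reinterpret_cast<std::uintptr_t>(elt);
    assert(raw <= UINT32_MAX);  // the value must have come from int_to_elt
    return static_cast<std::uint32_t>(raw);
}
```

Going through `uintptr_t` avoids the size-mismatch warnings that a direct cast from a 32-bit integer to a 64-bit pointer can produce.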

Both the STL and gnulib provide a number of container implementations; this analysis focuses on the list containers. Although gnulib provides several “list” implementations, only the gl_linked_list container is a true linked list, so its comparison with the STL list is the most relevant one. To illustrate the comparative performance of the other gnulib list implementations, four were selected for benchmarking:

- gl_linked_list

- gl_linkedhash_list

- gl_array_list

- gl_rbtree_list

A follow-up post will cover the performance of the STL containers that most closely resemble the gnulib list containers other than gl_linked_list.

Finally, the tests were run on a MacBook running OS X 10 with a 2.4GHz Intel Core 2 Duo processor, 3MB of L2 cache, and 2GB of RAM.

Results

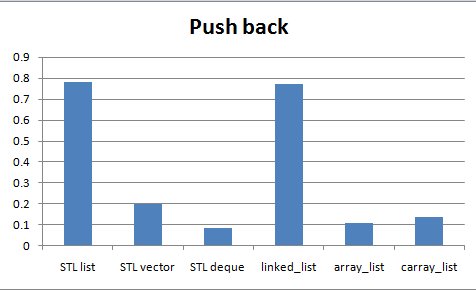

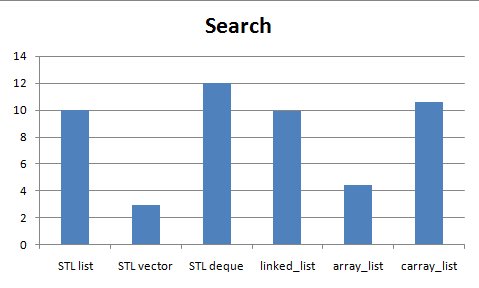

The results for each implementation were normalized with respect to the STL list results. With this normalization, a value less than one means the implementation is faster than the STL list, and a value greater than one means it is slower.
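As a sketch of that normalization, assuming it is a simple ratio of each measured time to the STL list time for the same test, the calculation could be written as follows. The `Timing` struct and the `normalize` function are names invented for this illustration, and no measured values from the benchmark are reproduced here.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical record: one implementation's time for one test.
struct Timing {
    std::string name;
    double seconds;
};

// Divide every time by the baseline (the STL list time for the same test).
// A result below 1.0 is faster than the baseline; above 1.0 is slower.
std::vector<Timing> normalize(const std::vector<Timing>& timings,
                              double baseline_seconds) {
    assert(baseline_seconds > 0.0);
    std::vector<Timing> out;
    out.reserve(timings.size());
    for (const Timing& t : timings)
        out.push_back({t.name, t.seconds / baseline_seconds});
    return out;
}
```

Under this definition the STL list itself always normalizes to exactly 1.0, which gives the reference line for reading the other values.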

The normalized results are shown in the accompanying graph.

Based on the above results, the STL list implementation and the gnulib gl_linked_list implementation perform very similarly, with the gnulib implementation very slightly faster. The gl_array_list implementation offers the best insertion performance (>5x faster than the STL implementation), and the gl_linkedhash_list implementation offers the best search performance (~5000x faster than the STL implementation).
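The size of the search gap is easier to understand with a sketch of the general idea behind a linked list paired with a hash table. The class below is not gnulib's implementation; `LinkedHashList` and its members are names invented for this illustration. It keeps elements in insertion order in a `std::list` and also indexes them in a `std::unordered_map`, so a search becomes a hash lookup instead of a walk from the front of the list.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <list>
#include <unordered_map>

// Illustrative only: a list that preserves insertion order and also keeps
// a hash index from each value to the first node holding it.
class LinkedHashList {
public:
    // Append to the end of the list and record the node in the index.
    void add_last(std::uint32_t value) {
        order_.push_back(value);
        // emplace leaves an existing entry alone, so the index keeps
        // pointing at the first occurrence of a duplicated value.
        index_.emplace(value, std::prev(order_.end()));
    }

    // Expected constant-time search via the hash index, versus the
    // linear scan that std::find performs on a plain linked list.
    bool contains(std::uint32_t value) const {
        return index_.find(value) != index_.end();
    }

    std::size_t size() const { return order_.size(); }

private:
    std::list<std::uint32_t> order_;
    std::unordered_map<std::uint32_t,
                       std::list<std::uint32_t>::iterator> index_;
};
```

The trade-off in a design like this is extra memory and extra work on every insertion to maintain the index, in exchange for search cost that no longer grows with the length of the list.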

Conclusions

The two pure linked list implementations, the STL list and gl_linked_list, are almost identical in performance on both tests.

While the above results show the relative performance of the STL list against the other gnulib container implementations, this is not a fair or complete comparison, because the STL offers other sequence containers whose implementations are similar to some of the gnulib containers analyzed here. A follow-up post will fill out the comparison started here and include STL vectors and sets.

EDIT: The original intent of this post, to compare an STL list against the full suite of gnulib list implementations, was deeply flawed. The gnulib “list” interface is closer to the more generic STL sequence interface, and a more correct and complete comparison would set the gnulib containers against the corresponding STL containers. This will be the focus of a follow-up post. I’ve edited this post to clarify the comparison in order to avoid confusion. While I realized my mistake shortly after publishing the post, I was happy to see that it was also picked up by several readers, so many thanks to those of you who provided comments for keeping me honest!