Graphviz is an excellent and free graph visualization program that provides a simple way to generate graphs for pretty much anything with a variety of layouts. The Graphviz layout programs take descriptions of graphs in a simple text language. These descriptions can be created and edited manually but are more usually generated programatically – especially for large data sets.

This post provides an example of using the Visitor pattern to generate a directed graph visualization of a trie using the Graphviz dot layout manager. Full python source code listing of this example is available here.

A Trie class is used to represent the trie data structure. This class is composed of a set of nodes representing the trie nodes. These nodes are anchored via a root node. The code for adding data to the trie is omitted for clarity but is available in the full source listing.

class Trie:

def __init__(self):

self.root = Node()

def walk(self, visitor):

self.root.walk(visitor)

From a visitor pattern perspective, the Node.walk() method is used to traverse the nodes and to pass each node encountered to the visitor object.

class Node:

def walk(self, visitor):

visitor.visitNode(self)

for nextNode in self.children.values():

nextNode.walk(visitor)

The GraphvizVisitor class is a Visitor subclass that generates the appropriate dot language representation for each visited node.

# Visitor interface

class Visitor:

def visitNode(self, node):

pass

# Visitor subclass that generates Graphviz dot file contents.

class GraphvizVisitor:

def __init__(self, dotGen):

self.dotGen = dotGen

def visitNode(self, node):

if node.isOutputNode:

dotGen.setNodeProperty(node.name, 'shape', 'doublecircle')

for edge, nextNode in node.children.items():

dotGen.addLink(node.name, nextNode.name, edge)

The dotGen object is an instance of the DotFileGenerator class which is used as a simple utility class to generate the dot file.

class DotFileGenerator:

def __init__(self, name):

self.name = name

self.outf = open(name+'.dot', 'w')

print >> self.outf, 'digraph %s {' % (self.name)

print >> self.outf, 'trankdir="LR";'

print >> self.outf, 'tnode [shape = "circle"];'

def setNodeProperty(self, nodeName, property, value):

print >> self.outf, 't%s [%s = %s];' %(nodeName, property, value)

def addLink(self, lhs, rhs, label):

print >> self.outf, 't%s -> %s [label = %s];' % (lhs, rhs, label)

def close(self):

self.outf.close()

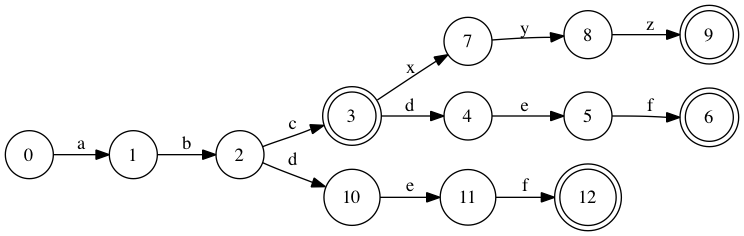

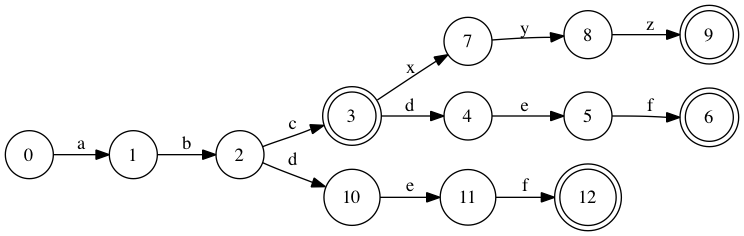

Consider the following example to illustrate the generated output:

# Build a trie

trie = Trie()

trie.addPattern("abc")

trie.addPattern("abcdef")

trie.addPattern("abcxyz")

trie.addPattern("abdef")

# Generate the dot file contents by walking the trie

dotGen = DotFileGenerator('trie')

visitor = GraphvizVisitor(dotGen)

trie.walk(visitor)

dotGen.close()

This produces the following dot file contents:

digraph trie {

rankdir="LR";

node [shape = "circle"];

0 -> 1 [label = a];

1 -> 2 [label = b];

2 -> 3 [label = c];

2 -> 10 [label = d];

3 [shape = doublecircle];

3 -> 7 [label = x];

3 -> 4 [label = d];

7 -> 8 [label = y];

8 -> 9 [label = z];

9 [shape = doublecircle];

4 -> 5 [label = e];

5 -> 6 [label = f];

6 [shape = doublecircle];

10 -> 11 [label = e];

11 -> 12 [label = f];

12 [shape = doublecircle];

}

After passing this dot file through Graphviz, we get: